AquiLLM

AquiLLM (pronounced ah-quill-em) is a tool that helps researchers manage, search, and interact with their research documents. The goal is to enable teams to access and preserve their collective knowledge, and to enable new group members to get up to speed quickly.

About AquiLLM

Research groups face persistent challenges in capturing, storing, and retrieving knowledge that is distributed across team members. Although structured data intended for analysis and publication is often well managed, much of a group's collective knowledge remains informal, fragmented, or undocumented—often passed down orally through meetings, mentoring, and day-to-day collaboration. This includes private resources such as emails, meeting notes, training materials, and ad hoc documentation. Together, these reflect the group's tacit knowledge—the informal, experience-based expertise that underlies much of their work. Accessing this knowledge can be difficult, requiring significant time and insider understanding. Retrieval-augmented generation (RAG) systems offer promising solutions by enabling users to query and generate responses grounded in relevant source material. However, most current RAG-LLM systems are oriented toward public documents and overlook the privacy concerns of internal research materials. We introduce AquiLLM, a lightweight, modular RAG system designed to meet the needs of research groups. AquiLLM supports varied document types and configurable privacy settings, enabling more effective access to both formal and informal knowledge within scholarly groups.

Developed with Considerations for Research Teams

AquiLLM focuses on the needs of teams rather than just individuals. Deployment options range from fully local to cloud-based, so groups keep control of their research data.

Support for Multiple Types of Data

Upload PDFs, fetch papers from arXiv, import meeting transcripts, scrape webpages, and process handwritten notes.

Natural Language Queries

Engage in context-aware conversations with AI about your document contents using natural language.

Organized Collections

Organize documents into logical collections for focused research projects and streamlined knowledge management. Support for different levels of permission and privacy.

Multiple LLM Providers

Flexible support for commercial LLM providers, including Claude, OpenAI, and Gemini, as well as local deployments of models like Gemma and Llama.

Technical Details

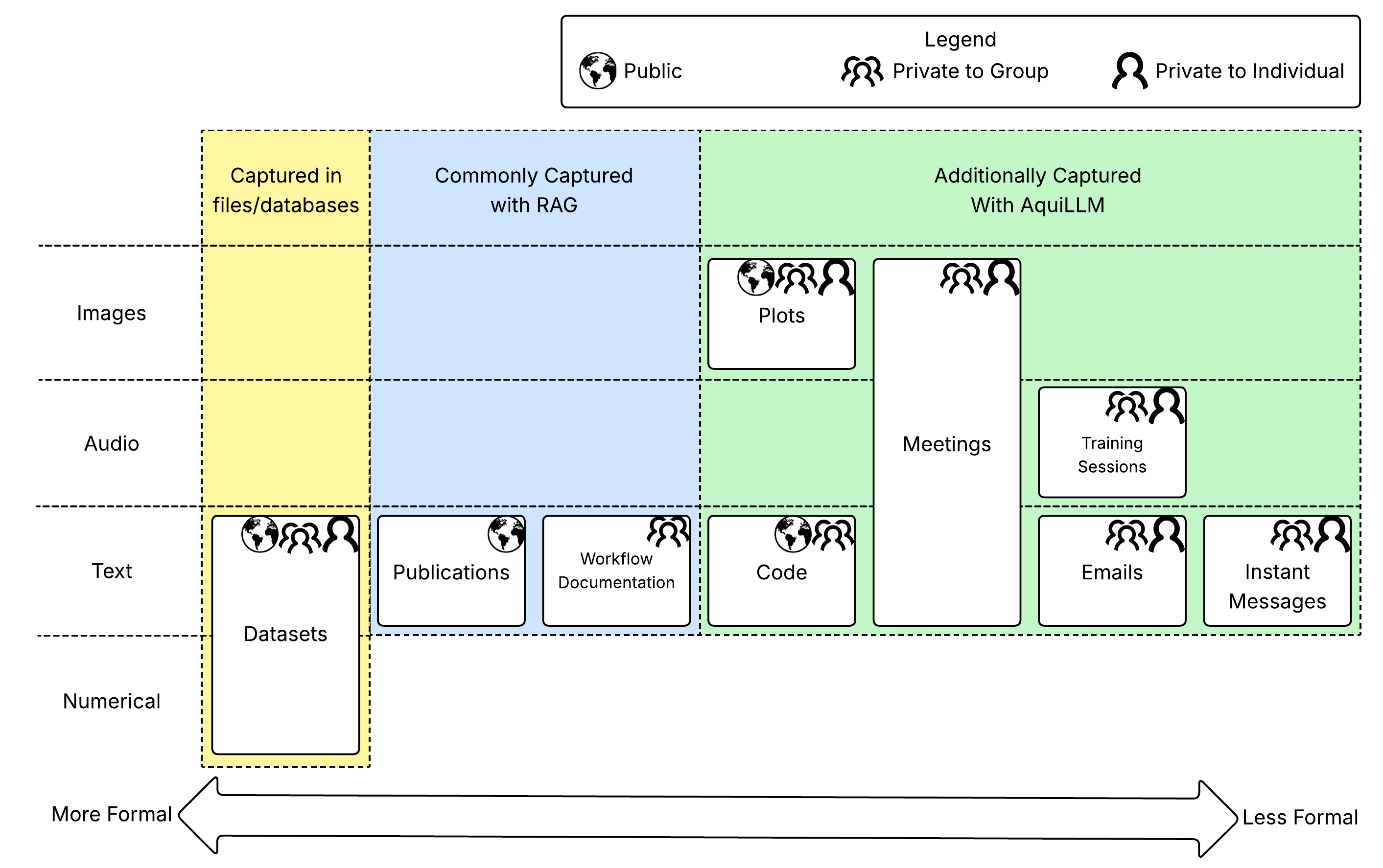

Data Types - Research groups generate a range of data, from formally published products such as publications and public datasets to informal data products like presentations, meeting notes, and plots. The informal data is just as important for the daily function of groups, but is difficult to capture and use. AquiLLM seeks to integrate both formal and informal data products while respecting the different levels of privacy that may be necessary with non-public data.

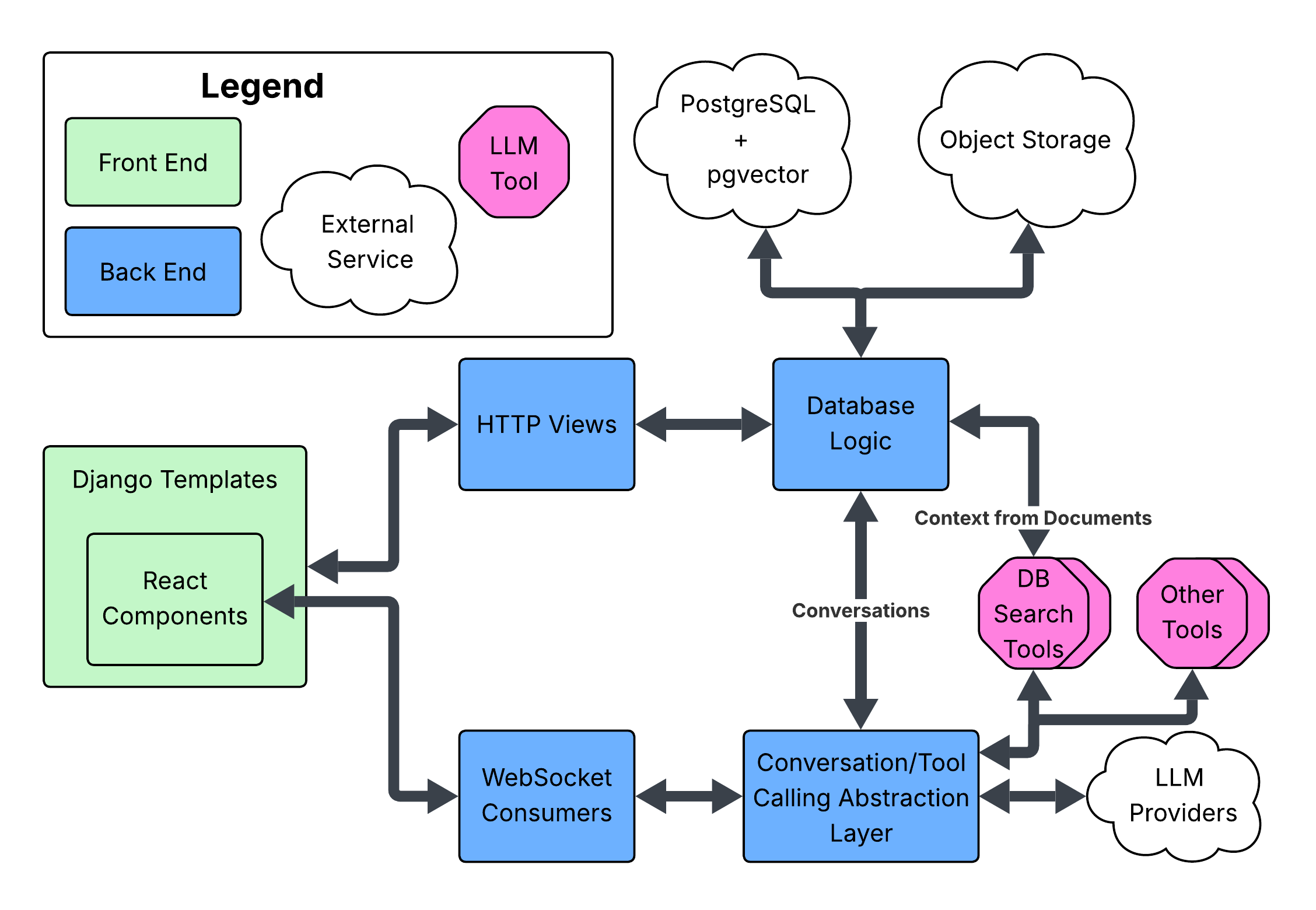

Architecture - AquiLLM is composed of a Django backend with custom LLM, tool-calling, and RAG integration logic; a front end composed of Django templates, many of which contain React components; and external services: the database, object storage, and LLM inference API. AquiLLM’s focus on easy deployment and maintenance for small teams drove a number of technical choices.
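To make the retrieval-augmented flow concrete, here is a minimal, self-contained sketch of the general idea: restrict the search to collections the user may read, rank chunks by similarity to the question, and assemble a grounded prompt. This is not AquiLLM's actual code. The `Chunk` class, the collection names, and the helper functions are hypothetical, and the bag-of-words cosine similarity is only a stand-in for a real embedding model and database. The call to the LLM inference API is omitted.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    collection: str  # hypothetical collection name
    text: str


def tokenize(text: str) -> Counter:
    """Lowercased bag of words; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list, allowed_collections: set, k: int = 2) -> list:
    """Return the k most similar chunks from collections the user may read."""
    q = tokenize(query)
    visible = [c for c in chunks if c.collection in allowed_collections]
    ranked = sorted(visible, key=lambda c: cosine(q, tokenize(c.text)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, retrieved: list) -> str:
    """Assemble the text that would be sent to the LLM (the call itself is omitted)."""
    context = "\n".join(f"[{c.collection}] {c.text}" for c in retrieved)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Illustrative data only; not taken from any real deployment.
chunks = [
    Chunk("meeting-notes", "We agreed to recalibrate the telescope pipeline before the next observing run."),
    Chunk("papers", "The survey measures stellar orbits near the Galactic Center."),
    Chunk("private-email", "The telescope pipeline password is stored in the group vault."),
]

question = "When do we recalibrate the telescope pipeline?"
top = retrieve(question, chunks, {"meeting-notes", "papers"}, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

Filtering by collection before ranking means that material the user cannot access never reaches the prompt, which is one simple way to respect per-collection privacy in a sketch like this.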

For more details about the implementation and initial findings, see our research paper: "AquiLLM: a RAG Tool for Capturing Tacit Knowledge in Research Groups" by Chandler Campbell, Bernie Boscoe, and Tuan Do, for presentation at USRSE 2025.

Contributors and Collaborators

Principal Investigators

- Prof. Bernadette Boscoe (Southern Oregon University)

- Prof. Tuan Do (UCLA)

Developers

- Chandler Campbell (Lead Developer, Southern Oregon University)

- Jacob Nowack (Southern Oregon University)

Contributors

- Skyler Acosta (Southern Oregon University)

- Zhuo Chen (University of Washington)

- Kevin Donlon (Southern Oregon University)

- Elyjah Kiehne (Southern Oregon University)

- Jonathan Soriano (UCLA)

About the Name

AquiLLM is a combination of the words "Aquila," the constellation, and "LLM," which stands for Large Language Model. Aquila is one of the most prominent constellations in the northern sky. The name reflects our group's history of working with astronomy and astronomers.

Alfred P. Sloan Foundation


National Science Foundation
